Content moderation is an integral part of managing brand credibility. It is no overstatement to say that user-generated content is an important tool for building brand recognition and trust. On social media, content moderation also shapes how we perceive events. Moderators are the online janitors who decide which content is permissible for others to see.

Ideally, platforms should be places of open expression, but also places where misinformation is removed. As Evelyn Douek argues, one person’s “free speech” is another person’s harm, and this tension needs to be considered and acted upon.

Several initiatives have been launched to counter such misinformation. One is the Trust & Safety Professional Association, a membership organization designed to support the content moderation community. It does so by creating policies for social media platforms.

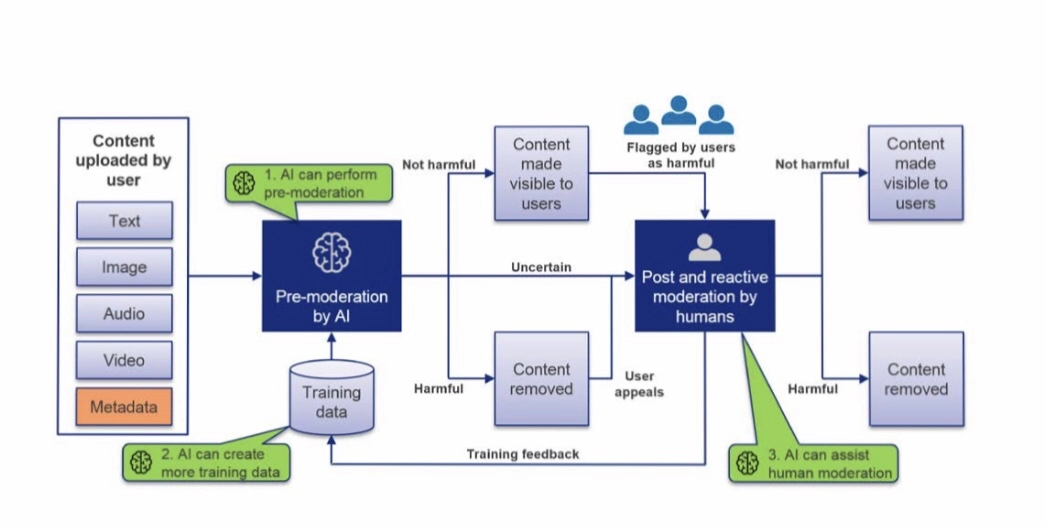

We are in an age where AI is harnessed to help with moderation. Jigsaw, a technology incubator created by Google, has developed Perspective API, which helps platforms moderate content by using machine learning models to detect comments that are deemed “toxic”.
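As a rough illustration of how a platform might use such a toxicity score, here is a minimal Python sketch. The endpoint and field names reflect Perspective API's public documentation as best understood here and should be checked against the current docs. The threshold and the sample response are assumptions made up for this example, and no network call is made.

```python
import json

# Endpoint and field names follow Perspective API's public documentation as
# best understood here; verify against the current docs before relying on them.
ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"
TOXICITY_THRESHOLD = 0.8  # assumed cut-off, not a recommended value


def build_request(text):
    """Build the JSON body asking for a TOXICITY score for one comment."""
    return {
        "comment": {"text": text},
        "requestedAttributes": {"TOXICITY": {}},
    }


def is_toxic(response, threshold=TOXICITY_THRESHOLD):
    """Read the summary toxicity score from a parsed response dict."""
    score = response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]
    return score >= threshold


# Hand-written sample response for illustration; no network call is made.
sample_response = {
    "attributeScores": {"TOXICITY": {"summaryScore": {"value": 0.91}}}
}
print(json.dumps(build_request("You are a terrible person")))
print(is_toxic(sample_response))
```

In practice the request body would be sent to the endpoint with an API key, and the threshold would be tuned to how strict the community guidelines are.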

Why Content Moderation?

Today’s consumers find user-generated content more trustworthy and memorable than any other form of media, and 25% of search results for some of the world’s largest brands are links to user-generated content. That makes a comment box and embedded comments important.

There are risks here. You need to look at how users portray your brand and at what you do to protect readers from offensive content, which can also be personal.

The European Commission’s Code of Practice on Disinformation, introduced in 2018, led numerous high-profile tech companies (Google, Facebook, Twitter, Microsoft, and Mozilla) to provide the Commission with self-assessment reports in early 2019.

Whether it’s videos submitted for a contest, flashy images posted on social media, or comments on blog posts and forums, there’s always a risk that certain user-generated content will be different from what is considered acceptable by the brand you’re representing.

Content Moderation Assists in Pattern Recognition

High-volume campaigns are all about pattern recognition. By having your moderators tag content with key properties, your team can gain actionable insights into the behavior and opinions of your users and customers.

A great example is Listerine mouthwash. The company made a concerted effort to study comments posted about its brand online. What Listerine learned is that its customers use it for reasons the company had never thought of: the second most mentioned use for the brand was to kill toenail fungus.
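To make the tagging idea concrete, here is a small Python sketch that counts moderator-applied tags to surface the most common themes. The comments, tag names and the `top_tags` helper are all hypothetical and only illustrate the approach.

```python
from collections import Counter

# Hypothetical moderator-tagged comments; texts and tags are illustrative only.
tagged_comments = [
    {"text": "Keeps my breath fresh all day", "tags": ["fresh-breath"]},
    {"text": "Use it every morning", "tags": ["fresh-breath", "routine"]},
    {"text": "Cleared up my toenail fungus", "tags": ["unexpected-use"]},
    {"text": "Great before meetings", "tags": ["fresh-breath"]},
    {"text": "Works on nail fungus too", "tags": ["unexpected-use"]},
]


def top_tags(comments, n=3):
    """Count every tag across all comments and return the n most common."""
    counts = Counter(tag for comment in comments for tag in comment["tags"])
    return counts.most_common(n)


for tag, count in top_tags(tagged_comments):
    print(tag, count)
```

A report like this is only as good as the tags, so it helps to agree on a short, fixed tag list before a campaign starts.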

Content Moderation = Search Engine Rankings and Increased Traffic

A scalable content moderation strategy can lead to more user engagement and better search engine rankings, which in turn lead to more traffic. We discussed this in “What is a Commenting Strategy?”

Types of Moderation

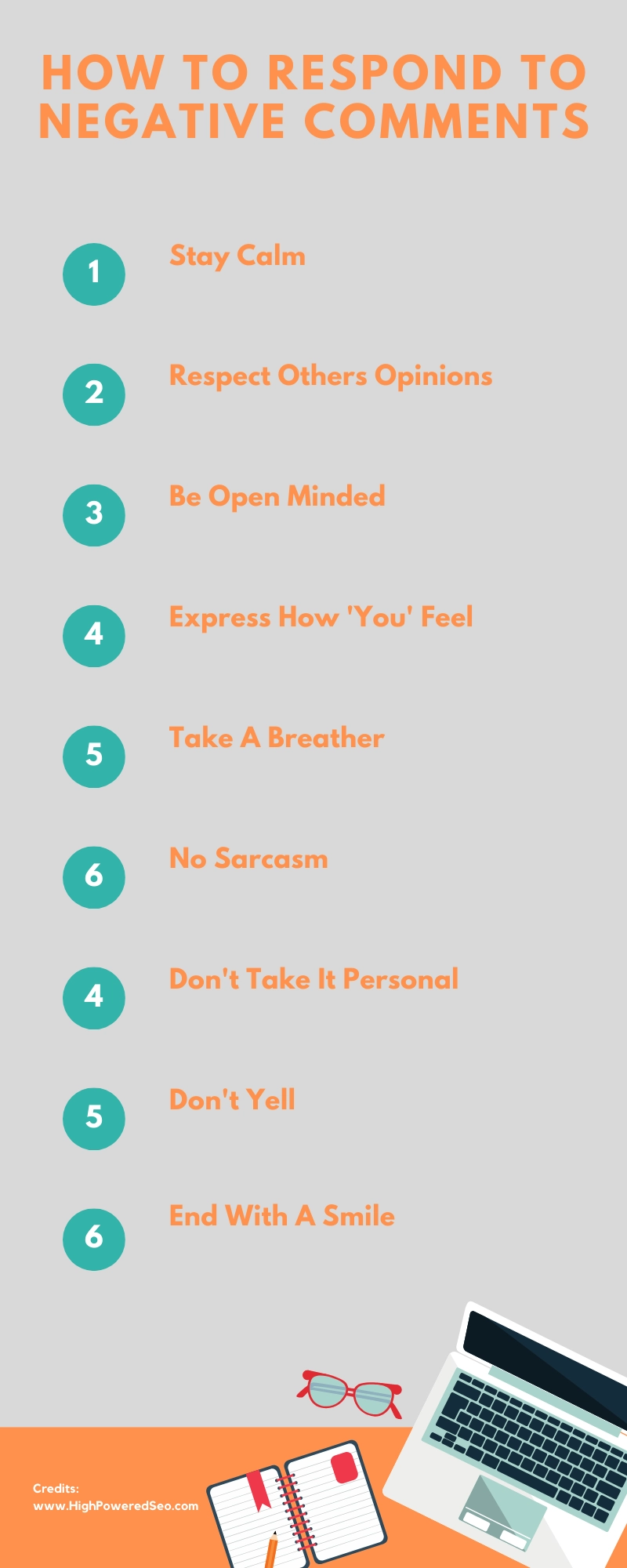

Comment moderation is about improving the user experience and keeping your visitors safe, and as a result building a good reputation, retaining customers and growing through word of mouth.

Let’s go through 6 types of content moderation.

1. Manual Pre-moderation

Manual pre-moderation is based on predetermined site guidelines. All user-submitted content is screened before it goes live on your site. Each piece of content is judged by a moderator, who decides whether to publish, reject or edit it.

2. Manual Post-moderation

Manual post-moderation allows content to go live on your site instantly and then be reviewed by a moderator after publication. As with manual pre-moderation, the moderator reviews each comment and decides whether to keep it, edit it or remove it.
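The difference between the two manual approaches comes down to what happens before a moderator looks at a comment. The following Python sketch shows one way to model it. The `Comment` class, the status names and the helper functions are assumptions made up for this example, not part of any particular commenting platform.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    PUBLISHED = "published"
    REJECTED = "rejected"


@dataclass
class Comment:
    text: str
    status: Status = Status.PENDING
    reviewed: bool = False


def submit(text, premoderate):
    """Pre-moderation holds the comment; post-moderation publishes it at once."""
    status = Status.PENDING if premoderate else Status.PUBLISHED
    return Comment(text=text, status=status)


def review(comment, approve, edited_text=None):
    """Apply the moderator's decision: publish, reject, or publish an edit."""
    if edited_text is not None:
        comment.text = edited_text
    comment.status = Status.PUBLISHED if approve else Status.REJECTED
    comment.reviewed = True
    return comment


def is_visible(comment):
    """Only published comments are shown to readers."""
    return comment.status is Status.PUBLISHED


held = submit("First!", premoderate=True)
live = submit("First!", premoderate=False)
print(is_visible(held), is_visible(live))
```

The review step is identical in both modes. Only the starting status differs, which is why post-moderation feels faster to users but exposes readers to unreviewed content for a while.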

3. Reactive Moderation

Reactive moderation relies on your users flagging or reporting content. This can be done through the use of report buttons on your site.
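A report button usually feeds a queue that moderators work through. Here is a minimal Python sketch of that idea, assuming a comment is escalated once a set number of distinct users have reported it. The `ReportQueue` class and the threshold of three are hypothetical choices for illustration.

```python
from collections import defaultdict

REPORT_THRESHOLD = 3  # assumed value; tune to your community size


class ReportQueue:
    """Tracks which users reported which comments."""

    def __init__(self, threshold=REPORT_THRESHOLD):
        self.threshold = threshold
        self._reporters = defaultdict(set)

    def report(self, comment_id, user_id):
        """Record a report; return True once the comment needs review."""
        # A set ignores repeat reports from the same user.
        self._reporters[comment_id].add(user_id)
        return len(self._reporters[comment_id]) >= self.threshold

    def needs_review(self):
        """List the comment ids that have reached the report threshold."""
        return sorted(
            cid
            for cid, users in self._reporters.items()
            if len(users) >= self.threshold
        )


queue = ReportQueue()
for user in ["ana", "ben", "ben", "cy"]:
    queue.report("comment-42", user)
print(queue.needs_review())
```

Counting distinct reporters rather than raw clicks makes it harder for a single user to push a comment into the review queue.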

4. Automated Moderation

Automated moderation is growing in popularity as more sophisticated filters and tools are developed. The most basic version is a filter that catches words from a list and acts on preset rules. Filters are best set up by someone with deep knowledge of moderation and industry trends. These filters require continuous review to ensure that the rules they build on are up to date and accurate.
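The most basic filter described above can be sketched in a few lines of Python. The word lists and the three outcomes (`reject`, `review`, `publish`) are assumptions chosen for this example, and a real filter would need far more careful matching.

```python
import re

# Hypothetical word lists; a real deployment would maintain and review these.
BLOCKLIST = {"cheappills", "scamlink"}
WATCHLIST = {"giveaway", "discount"}


def moderate(text, blocklist=BLOCKLIST, watchlist=WATCHLIST):
    """Apply preset rules: reject blocked words, hold watched words for review."""
    words = set(re.findall(r"[a-z0-9']+", text.lower()))
    if words & blocklist:
        return "reject"
    if words & watchlist:
        return "review"
    return "publish"


print(moderate("Buy CHEAPPILLS now!"))
print(moderate("Huge giveaway this weekend"))
print(moderate("Thanks for the helpful article"))
```

Exact word matching is easy to evade with misspellings and can misfire on innocent uses, which is one reason the lists and rules need the continuous review mentioned above.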

5. Distributed Moderation

Here you leave the moderation efforts almost entirely to your community. This moderation method relies on rating and voting systems, where highly voted content ends up at the top of the page and low-rated content is hidden or removed.
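A voting system like this can be reduced to a score per comment. The Python sketch below assumes the score is upvotes minus downvotes and that comments below a cut-off are hidden. Both the scoring rule and the cut-off value are illustrative assumptions, as real platforms often use more elaborate ranking.

```python
HIDE_BELOW = -2  # assumed cut-off score


def score(comment):
    """Net score: upvotes minus downvotes."""
    return comment["up"] - comment["down"]


def rank_comments(comments, hide_below=HIDE_BELOW):
    """Drop comments scoring under the cut-off, then sort best first."""
    visible = [c for c in comments if score(c) >= hide_below]
    return sorted(visible, key=score, reverse=True)


# Hypothetical comments for illustration.
comments = [
    {"id": "a", "up": 2, "down": 1},
    {"id": "b", "up": 10, "down": 0},
    {"id": "c", "up": 0, "down": 5},
]
print([c["id"] for c in rank_comments(comments)])
```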

6. No Moderation

This could be the worst thing you could do. No moderation leaves your audience in a state of anarchy, which is certainly not advisable. Instead of having no moderation, you might as well have no comments at all, as Seth Godin decided.

A Conclusion with the Future in Mind

There is no doubt that content moderation will be governed by AI. The one cause for concern is that AI moderation algorithms must keep adapting to evolving content.

Most current AI approaches rely on training the system with an initial dataset and then deploying the system to make decisions on new content.

As harmful online content evolves, whether through new derogatory terms or phrases or a changing level of acceptability, AI algorithms must be retrained to adapt.
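The train, deploy and retrain cycle can be illustrated with a deliberately tiny Python example. The "model" here is just a set of words seen mostly in harmful examples. It is a toy stand-in for a real machine learning model, and the data, the made-up slang term "zorp" and the function names are all invented for this sketch.

```python
from collections import Counter


def train(examples, min_count=2):
    """Toy 'model': words seen at least min_count times in harmful
    examples and more often there than in acceptable ones."""
    harmful, acceptable = Counter(), Counter()
    for text, is_harmful in examples:
        target = harmful if is_harmful else acceptable
        target.update(set(text.lower().split()))
    return {
        word
        for word, n in harmful.items()
        if n >= min_count and n > acceptable[word]
    }


def predict(model, text):
    """Flag text that contains any word the model learned as harmful."""
    return any(word in model for word in text.lower().split())


# Initial labeled dataset (hypothetical): (text, is_harmful).
initial = [
    ("you are a jerk", True),
    ("what a jerk move", True),
    ("you are a star", False),
    ("what a nice move", False),
]
model = train(initial)
print(predict(model, "pure zorp"))  # new slang is missed by the old model

# Newly labeled examples collected after deployment (hypothetical).
new_examples = [
    ("such zorp energy", True),
    ("total zorp behaviour", True),
    ("such good energy", False),
    ("total team effort", False),
]
retrained = train(initial + new_examples)
print(predict(retrained, "pure zorp"))
```

The first model has never seen the new term, so it lets it through. Only after fresh labeled examples are added and the model is retrained does it catch the term, which is the adaptation problem in miniature.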
